The (revised) Future of AI in Game Testing

With all the hype surrounding ChatGPT, Midjourney, Stable Diffusion, and the AI-powered search offerings from Google and Microsoft, it's no wonder many people are looking to AI as a potential solution for what some consider a mundane task. Many developers I have spoken to worry that AI may put people out of work, so we felt it necessary to revisit this topic and shed some light on the impact AI will have on game testing.

If you are unfamiliar with the impact that machine learning and AI have had on our daily lives, look no further than Gmail, Grammarly, or Google Docs, whose suggestions can seem almost prescient when you write emails, documents, searches, and more. These tools have been trained on our every action for over a decade, and the latest entry from OpenAI, ChatGPT, is similar in scale if not in scope. Where those basic tools predict what we're going to write based on the current context, ChatGPT is proving useful across a much broader range of contexts. It's amazing to watch ChatGPT answer general questions or write something from a user's prompt, but it isn't magic: it is the result of training algorithms on billions and billions of examples produced by people.

The same is true of GitHub Copilot, which not only writes code for you from a minimal set of parameters but also seems to predict what you need to write next based on what you have already written, and can even produce fully featured applications from little more than comments. Copilot, just like ChatGPT, has been trained on billions of lines of code written by millions of developers the world over. It can't write anything entirely new, but it is very good at predicting what needs to be written based on what has come before. Some would even argue that everything has been written before, if not in the same combination. As many have shown, these models are not without their flaws, but that's not the point I want to make.

The limitations of today’s AI when it comes to game testing

When it comes to game testing, more so than any other form of application testing, there simply isn't enough data to train a model to do anything meaningful. This is why many experiments in applying AI to testing have used evolutionary algorithms and reinforcement learning rather than generative models, which depend on massive amounts of training data. Evolutionary algorithms are the brute-force equivalent of an infinite number of monkeys at an infinite number of typewriters: eventually, you will get the works of Shakespeare. However, those works may be buried in a sea of unusable output, and the computation needed to generate anything useful with an evolutionary algorithm, at least when testing an application as complex as a game, is going to be expensive. Let me elaborate.
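To make that cost concrete, here is a minimal sketch of an evolutionary search, assuming a hypothetical one-dimensional "level" of 30 cells with a few pits, where each genome is a fixed-length string of inputs (`R` steps one cell, `J` jumps two). Everything in it (`PITS`, `GOAL`, `fitness`, `evolve`, and the population and mutation settings) is invented for illustration. It uses simple truncation selection with elitism plus random mutation, and it is a toy, not a game-testing tool.

```python
import random

# Hypothetical toy level: cells 0..GOAL, with pits that end the run.
GOAL = 29
PITS = {5, 11, 19, 24}
ACTIONS = "RJ"  # R = step right one cell, J = jump right two cells
GENOME_LENGTH = 20


def fitness(genome: str) -> int:
    """Return how far the input sequence gets before a pit or the goal."""
    position = 0
    for action in genome:
        next_position = position + (1 if action == "R" else 2)
        if next_position in PITS:
            return position  # fell in: score the last safe cell
        position = next_position
        if position >= GOAL:
            return GOAL
    return position


def random_genome(rng: random.Random) -> str:
    return "".join(rng.choice(ACTIONS) for _ in range(GENOME_LENGTH))


def mutate(genome: str, rate: float, rng: random.Random) -> str:
    # Each input is independently replaced with a random one at the given rate.
    return "".join(rng.choice(ACTIONS) if rng.random() < rate else a for a in genome)


def evolve(population_size: int = 50, generations: int = 200,
           mutation_rate: float = 0.05, seed: int = 0) -> tuple[str, int, int]:
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(population_size)]
    best, evaluations = population[0], 0
    for generation in range(1, generations + 1):
        ranked = sorted(population, key=fitness, reverse=True)
        evaluations += population_size  # one simulated playthrough per genome
        best = ranked[0]
        if fitness(best) >= GOAL:
            return best, generation, evaluations
        # Keep the top 20% unchanged and refill with mutated copies of them.
        elite = ranked[: max(1, population_size // 5)]
        children = [mutate(rng.choice(elite), mutation_rate, rng)
                    for _ in range(population_size - len(elite))]
        population = elite + children
    return best, generations, evaluations


best, generation, evaluations = evolve()
print(f"best={best} reached={fitness(best)} generation={generation} evaluations={evaluations}")
```

The `evaluations` counter is the number to watch: every generation costs a full set of simulated playthroughs (50 here), and whatever genome wins is just a string of inputs with no notion of why it works.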

Back in October 2018, we published a blog post on the state of AI in relation to game testing at that time. There were articles and offerings from big players like OpenAI and IBM, as well as videos from prominent YouTubers such as SethBling, which seemed to indicate that major shifts were coming in the way we play and test video games. At that time, we predicted that AI would be unable to adequately test incredibly complex and interactive projects. What we didn't realize then is that even an army of human testers would not be fully up to the task; they simply lack the tools or the time. Take, for example, Cyberpunk 2077. When you combine a massive world, thousands of objectives, and a nearly infinite number of paths, then add support for multiple platforms spanning older and current-generation hardware, it's no wonder people are looking for a better solution than simply adding more hands.

To test a game like Cyberpunk 2077, an AI solution would require a combination of exploratory testing techniques and generative approaches, as well as a vast sea of existing gameplay data, none of which existed at the time. It would also need to be massively parallel. All of this leaves us with little more than bots that mindlessly run around the game world, interacting with objects, clicking on things, accepting quests but never completing them, shooting anything that moves, or simply dying.

Even the most powerful AI tools will still struggle to understand the context of a gaming environment

The problem in gaming is context. Examples such as OpenAI Gym (and its maintained successor, Gymnasium) demonstrate the ability to play a game given a limited set of actions and a simple observation-and-reward system. These are often 2D side-scrollers or even simpler games like Pong, where the goal can be as simple as keeping a dot in play on the screen. What these approaches lack is context about what they're doing, and that context can come from two sources. The first is interpreting what's on the screen at any given time and applying that context to the agent's behavioral output. The second is capturing data from the underlying application or engine that tells us what is happening in the scene when we perform our inputs. Sometimes this comes from visible data, such as the score on the screen, and sometimes from underlying game state information. Without context, our inputs are meaningless. The AI might learn to navigate to the end of a level after hundreds or thousands of iterations, but that ability cannot be applied to any sort of testing or outcome-driven objective (e.g., “Finish the main quest line”).
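The sketch below illustrates the gap, assuming a hypothetical `ToyLevelEnv` whose `reset()` and `step()` methods mirror the shape of Gymnasium's API (`step()` returns observation, reward, terminated, truncated, info) without depending on the library. The reward only measures progress to the right, while the `info` dictionary stands in for game state captured from the engine. The two policies (also hypothetical) earn identical rewards, but only the engine state reveals which one actually accepted the quest.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyLevelEnv:
    """Hypothetical Gym-style level: a corridor with a quest giver partway along."""
    length: int = 10
    quest_giver_at: int = 3
    position: int = 0
    quest_accepted: bool = False

    def reset(self) -> tuple[int, dict]:
        self.position = 0
        self.quest_accepted = False
        return self.position, self._info()

    def step(self, action: str) -> tuple[int, float, bool, bool, dict]:
        reward = 0.0
        if action == "right":
            self.position += 1
            reward = 1.0  # the reward only encodes progress to the right
        elif action == "interact" and self.position == self.quest_giver_at:
            self.quest_accepted = True  # invisible to the reward signal
        terminated = self.position >= self.length - 1
        return self.position, reward, terminated, False, self._info()

    def _info(self) -> dict:
        # Context: state captured from the "engine", not from the screen.
        return {"position": self.position, "quest_accepted": self.quest_accepted}


Policy = Callable[[int, dict], str]


def always_right(observation: int, info: dict) -> str:
    return "right"


def quest_aware(observation: int, info: dict) -> str:
    # Assumes the quest giver is at cell 3: interact once, then keep moving right.
    if info["position"] == 3 and not info["quest_accepted"]:
        return "interact"
    return "right"


def run_episode(env: ToyLevelEnv, policy: Policy, max_steps: int = 100) -> tuple[float, dict]:
    observation, info = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        observation, reward, terminated, truncated, info = env.step(policy(observation, info))
        total_reward += reward
        if terminated or truncated:
            break
    return total_reward, info


for policy in (always_right, quest_aware):
    reward, info = run_episode(ToyLevelEnv(), policy)
    print(policy.__name__, reward, info["quest_accepted"])
# always_right 9.0 False
# quest_aware 9.0 True
```

A reward-driven agent has no reason to prefer `quest_aware` over `always_right`; only a check against engine state, such as `info["quest_accepted"]`, turns the playthrough into a test.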

User intent is everything when it comes to finding high-value bugs

So does this mean AI can never be applied to game testing? Absolutely not, but there are challenges that need to be addressed. By their nature, tests compare expected behavior to an actual result, which requires some sense of intent. Intent here refers not only to what's happening in the game but also to the experience the designers meant to deliver. Establishing intent at a high level, such as “move right until the player reaches the end” in SethBling's MarI/O example, is simple enough, but what happens along the way, and whether everything functions as intended, is much more nuanced. This means we need to start capturing the data that will train future algorithms if AI-driven game testing is ever to become a reality. That introduces additional problems: what telemetry and user context can we capture that provides meaningful usage and behavior data for one game, and how much of it can be reused across multiple properties?

Unlike emails, documents, or web pages, video games are rarely similar enough that context drawn from one can be meaningfully applied to another. In a sufficiently long-lived project, we might be able to gather enough telemetry and user behavior to determine what types of tests need to be run for future versions, but it would be difficult to the point of being impractical to derive meaningful information from a game like World of Warcraft and apply it to drive tests against Cyberpunk 2077.

To say that AI won’t be used for game testing would be akin to predicting that the horse-drawn wagon would outlive the automobile. Several Ph.D. theses have been written on this subject, and that work does seem promising. However, it rests on the assumption that gameplay without context is meaningful in itself. In some use cases this can be true, such as finding holes in the game world or testing whether the player can navigate within the confines of a procedurally generated environment like those in No Man’s Sky. But more meaningful tests, such as whether enemies behave as they should when the player is in stealth, or whether player damage numbers meet expectations with certain combinations of gear and buffs/debuffs, will require agents to know much more about the intent of the game than is currently possible.
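A damage test of the kind described above might look like the following sketch. The `Modifier` type, the additive-percentage formula in `compute_damage`, and the values in `EXPECTED` are all hypothetical stand-ins for a real game's damage code and a designer's spec; in a real test, `actual` would be read from the running game. The expected values are what matter: they encode the designers' intent, which an agent exploring the world cannot infer on its own.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Modifier:
    """Hypothetical gear piece, buff, or debuff."""
    name: str
    flat: float = 0.0     # added to base damage (e.g., +20 from a weapon mod)
    percent: float = 0.0  # additive multiplier (e.g., 0.25 buff, -0.10 debuff)


def compute_damage(base: float, modifiers: list[Modifier]) -> float:
    # Hypothetical formula: flat bonuses first, then summed percentages, floored at 0.
    flat = sum(m.flat for m in modifiers)
    percent = sum(m.percent for m in modifiers)
    return max(0.0, (base + flat) * (1.0 + percent))


WEAPON_MOD = Modifier("Weapon mod", flat=20.0)
DAMAGE_BUFF = Modifier("Damage buff", percent=0.25)
DAMAGE_DEBUFF = Modifier("Damage debuff", percent=-0.10)

# Designer-specified expectations: (label, base damage, modifiers, expected damage).
EXPECTED = [
    ("no gear", 100.0, [], 100.0),
    ("gear only", 100.0, [WEAPON_MOD], 120.0),
    ("gear + buff", 100.0, [WEAPON_MOD, DAMAGE_BUFF], 150.0),
    ("gear + buff + debuff", 100.0, [WEAPON_MOD, DAMAGE_BUFF, DAMAGE_DEBUFF], 138.0),
]


def check_damage_table() -> list[tuple[str, float, float]]:
    """Return (label, expected, actual) for every case that misses expectations."""
    failures = []
    for label, base, modifiers, expected in EXPECTED:
        actual = compute_damage(base, modifiers)  # in practice: read from the running game
        if not math.isclose(actual, expected, rel_tol=1e-9):
            failures.append((label, expected, actual))
    return failures


print(check_damage_table())  # an empty list means every case matched
```

If a change to the damage code started ignoring debuffs, the last case would come back as `150.0` instead of `138.0` and land in the failure list, a bug that an agent measuring only survival or progress would have no reason to notice.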

In short, AI will need to walk before it can run. And right now, as an industry, we are still learning to crawl when it comes to automated game testing.

AI as a tool that will complement games testers, not replace them

Here at GameDriver, we believe the future of video game and Immersive Experience testing is a combination of automated functional testing to make sure that all of the features and systems “work” as designed; AI-driven “behavioral testing” to test the bounds of what is possible given a set of rules and conditions; and human-powered experience testing to ensure the intent of the experience is desirable, whether that be for entertainment, social interaction, education, or something entirely new. To return to our earlier analogy, the tester is not the horse being replaced by the automobile but the driver, who moves from the wagon to the car. AI is simply the engine that powers that car.

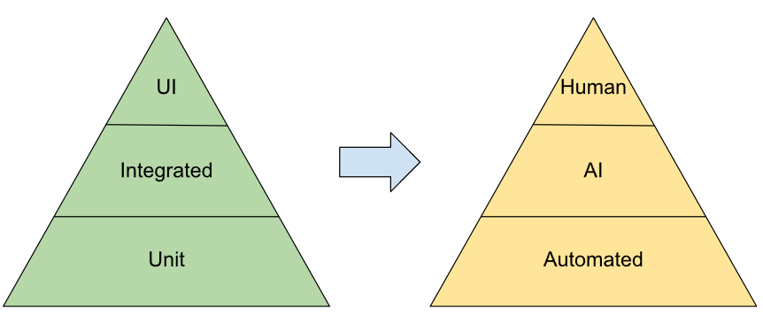

This will change the well-known testing pyramid from one that breaks down the types of technical tests performed into one that covers the fundamental aspects of our experiences, including the functionality, limitations, and desired experience of a given application or game. We believe this shift is not only inevitable but necessary for the industry to succeed, as it will enable the delivery of far more complex, higher-quality Immersive Experiences.