State of the Game Survey Results

Back in March, we announced a survey to better understand the state of quality in the Gaming and Immersive Experiences industry. In less than 2 months, we received hundreds of (legitimate) responses from developers and testers of various platforms all over the world. As expected, some of the responses to that survey were made by bots. After all, this is a software automation company and we do appreciate a good use of automation when we see it. Who doesn’t?

We saw some interesting trends in the responses, such as who is involved in testing, what platforms they are building for, and the primary challenges they experience in delivering a quality product to their customers. We also learned that most developers and testers are at least aware of the benefits of automation in testing, but that this is very rarely applied consistently. This is one of the driving goals behind everything we do at GameDriver.

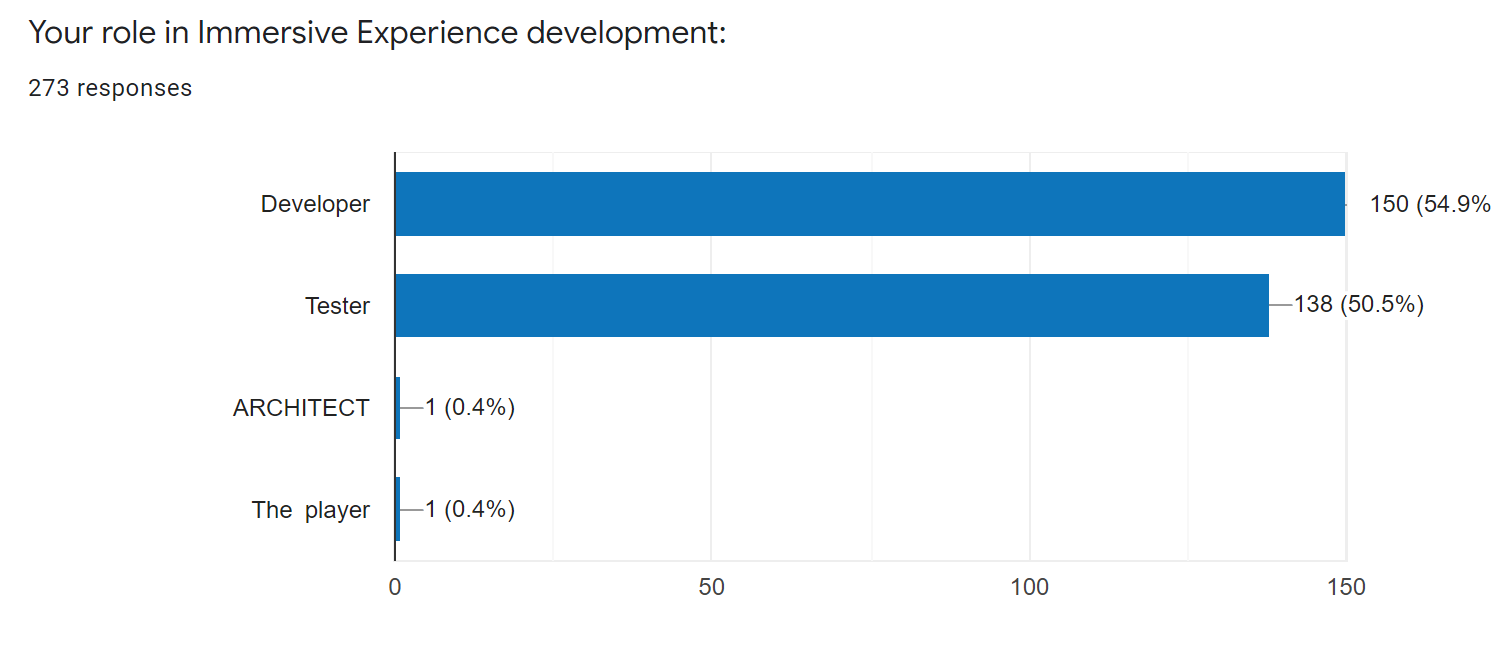

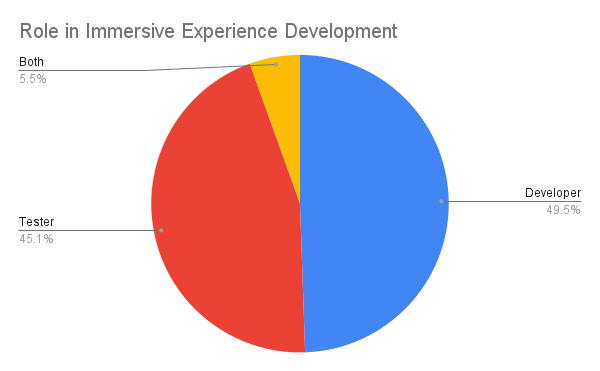

Let’s take a look at some of the data we collected in the survey and what it can tell us about the state of quality, at least among those respondents. First, out of the 273 respondents we could reasonably identify as being real (because what is “real” anyway? For all we know, we’re all living in a simulation and none of this is “real”...), the majority were Developers at 54.9%, followed by Testers at 50.5%.

But wait a minute, that’s more than 100%? Correct. We allowed both options here and in many questions, as it is quite common for people to hold multiple roles in a team. Within the responses, only 15 identified as filling both roles, while the largest group was still strictly Developers.
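The overlap can be sanity-checked with a little inclusion-exclusion arithmetic. The sketch below works only from the published percentages and assumes that every one of the 273 respondents selected at least one of the two roles, so the counts it derives are approximations rather than official survey figures.

```python
# Rough check of the role overlap using inclusion-exclusion.
# Assumption: all 273 respondents selected at least one of the two roles.
TOTAL = 273
DEVELOPER_PCT = 54.9
TESTER_PCT = 50.5


def estimate_overlap(total, pct_a, pct_b):
    """Estimate how many respondents selected both options."""
    overlap_pct = pct_a + pct_b - 100.0
    return round(total * overlap_pct / 100.0)


both = estimate_overlap(TOTAL, DEVELOPER_PCT, TESTER_PCT)
developers = round(TOTAL * DEVELOPER_PCT / 100.0)
testers = round(TOTAL * TESTER_PCT / 100.0)

print(f"Developers: ~{developers}, Testers: ~{testers}, both: ~{both}")
print(f"Developers only: ~{developers - both}, Testers only: ~{testers - both}")
```

Under that assumption, the estimated overlap comes out at roughly 15 respondents, which lines up with the number who identified as filling both roles.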

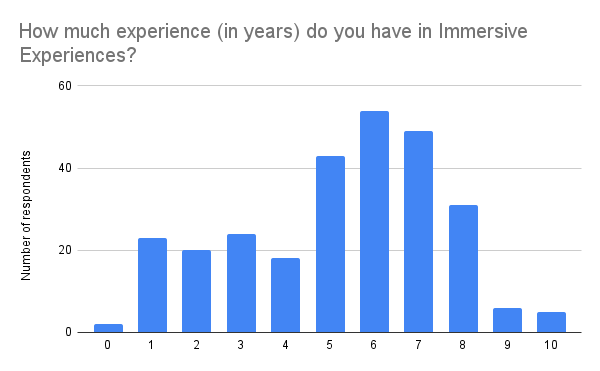

Next, we looked at the number of years of experience. This follows a bell-like curve which is to be expected in such a survey, with the majority falling between 5-8 years. In most environments, this would be considered a “Senior” level role in terms of experience.

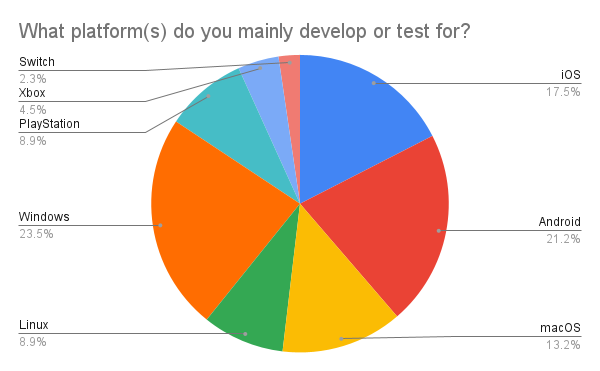

The next question was around the platforms people are developing for, which was also a multiple-choice question since many titles are released on multiple platforms simultaneously. Surprisingly, the majority were building games for Windows, but less surprisingly the next two were Android and iOS. Console development represented less than half of the total.

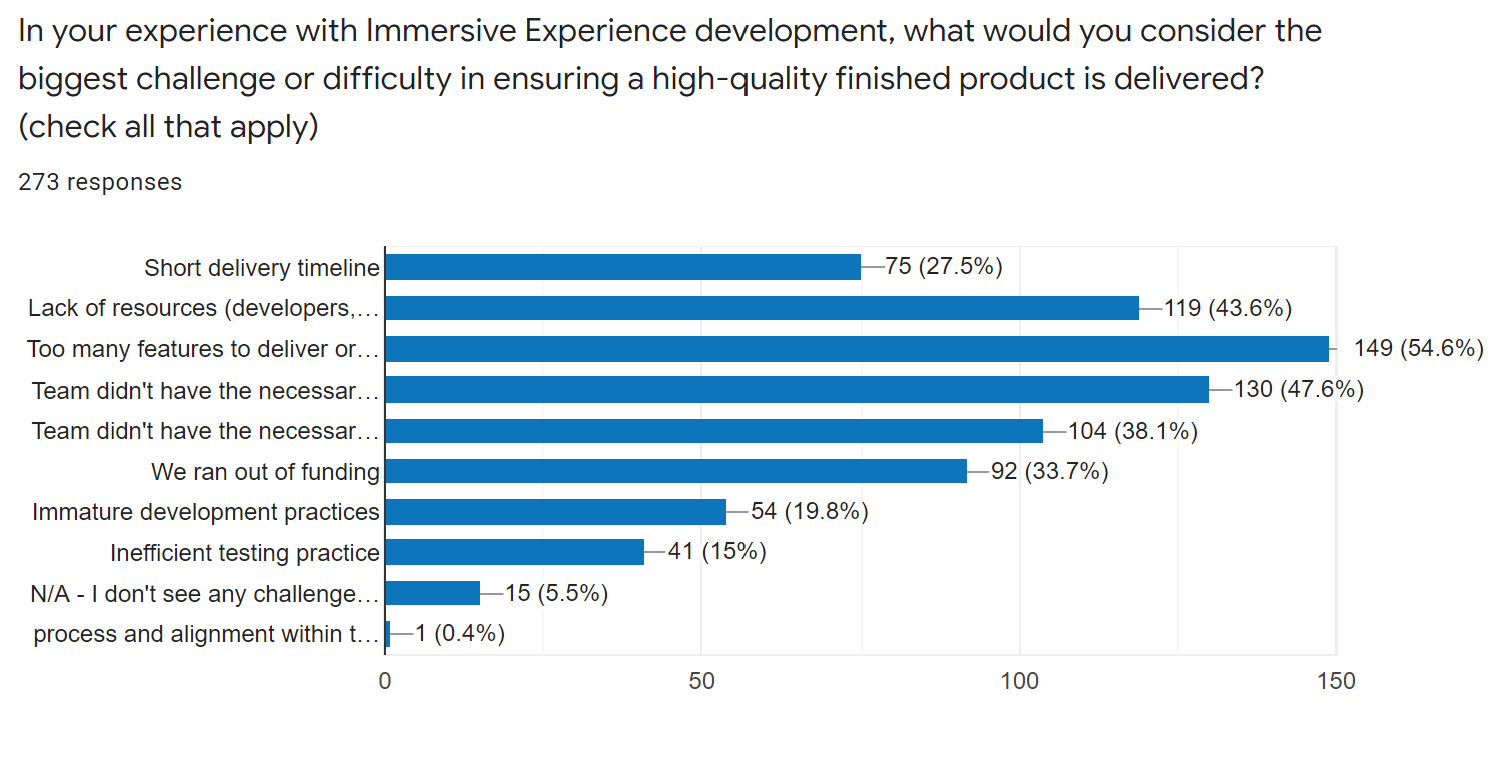

Next, and probably the most interesting area was the question “What would you consider the biggest challenge or difficulty in ensuring a high-quality finished product is delivered?”

The graph above is a little hard to read given the length of the options, so let’s look a little closer. The possible responses included:

- Short delivery timeline

- Lack of resources (developers, testers, etc...)

- Too many features to deliver or test

- The team didn't have the necessary tools

- The team didn't have the necessary skills

- We ran out of funding

- Immature development practices

- Inefficient testing practice

- N/A - I don't see any challenges (woohoo!)

- Other

The top 3 response categories for this question were, in order from greatest to least:

- Too many features to deliver or test: 54.6%

- The team didn't have the necessary tools: 47.6%

- Lack of resources (developers, testers, etc...): 43.6%

The remaining responses all fell below 40%. These three, however, are indicative of the wider problem faced by developers and testers today: too much to test, and not enough time, resources, or tools to test everything. So we end up prioritizing what is considered important and leaving a lot of potentially experience-breaking bugs undiscovered. These usually become apparent when the product is out the door and in the hands of real users.

Another common cause of churn in development and testing is the need to test the same features every time there is a new build or release. These are usually the same critical areas that are prioritized due to the time and resource constraints mentioned above, or areas that simply get in the way of doing other testing. Often, it makes sense to build shortcuts to get around things like character creation, leveling, etc… sometimes using character data that is reloaded each time a testing cycle begins, but this can present another set of challenges and risks. We won’t go into depth on that subject here, but definitely in a future blog.

Wrapping up this question, we recognize there are likely other challenges that can arise during game development, so we expected to see a lot more additional reasons than we did. The only one added here was “process and alignment within the team”, which is certainly an understandable issue. No matter what your process or direction is, you want everyone pointing in the same direction or it will be very difficult to get where you want to go.

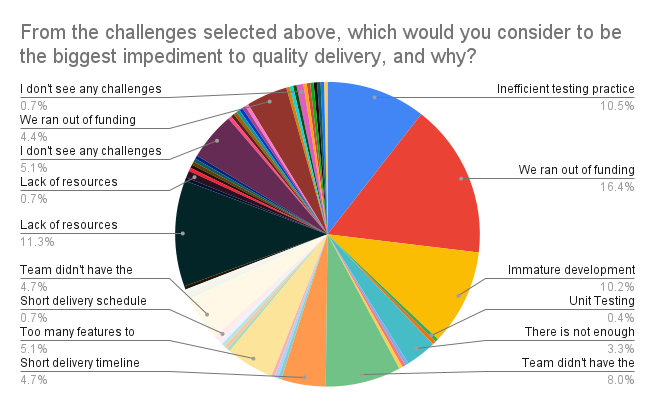

The next question was “From the challenges selected above, which would you consider to be the biggest impediment to quality delivery, and why?”. The highest % of responses indicated funding was an issue, and the next highest pointed to insufficient resources (developers, testers, etc…). These are often related issues, as nearly anything can be achieved with enough people and time, and both are dependent on funding.

The next highest response was referring to an “Inefficient Testing Practice”, with additional comments such as “too expensive to run long automation and manual regression of bugs” that could either fall into the time/resources category or under inefficient practice.

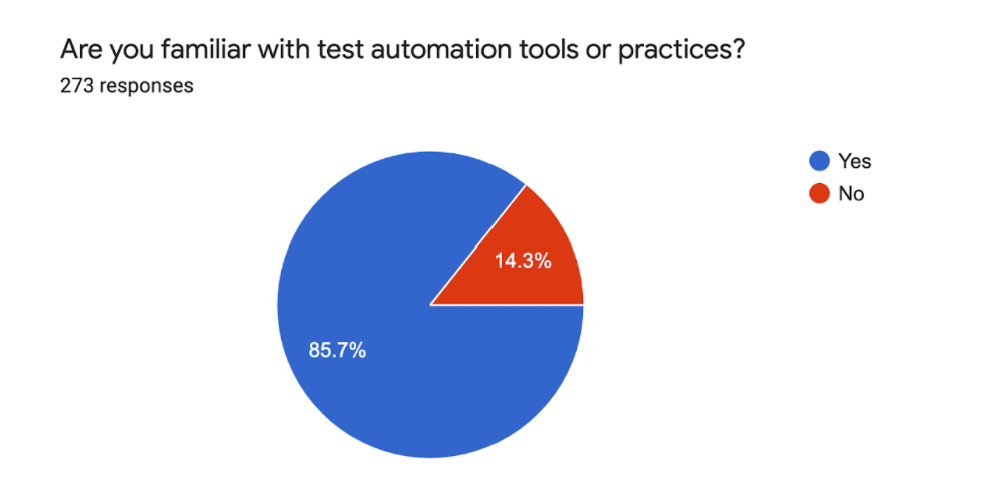

The vast majority of respondents are “familiar with test automation tools or practices”, which is encouraging.

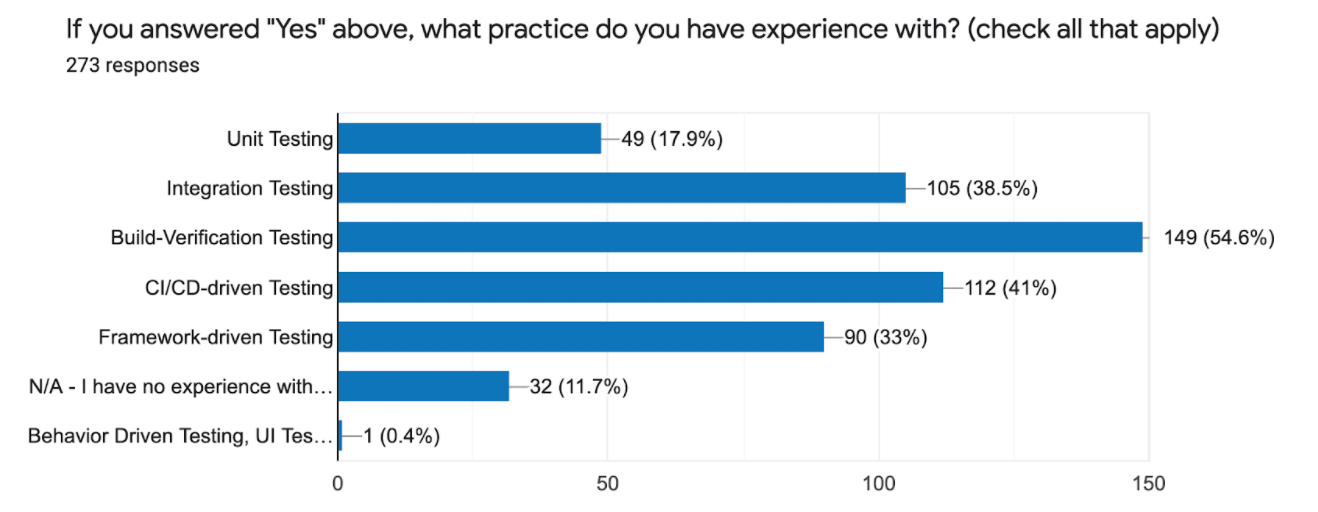

The detailed response to this category shows that while most Dev/Testers have experience with Build-Verification Testing, CI/CD-driven Testing, and Integration Testing, projects are potentially employing these concepts inconsistently when considered against the previous questions, which pointed toward a lack of tooling and resources as a common cause of failure to deliver. This would further suggest that there is at least a relationship between the application of automation concepts and the success of a project, but admittedly it may be too easy to jump to conclusions there ;)

What we can safely say here is that the majority of test automation goes towards Build-Verification Testing, followed by CI/CD-driven Testing (similar to build-verification, but with a focus on changes), and then Integration Testing, but that the % of experience with the former two and remaining categories is still fairly low.

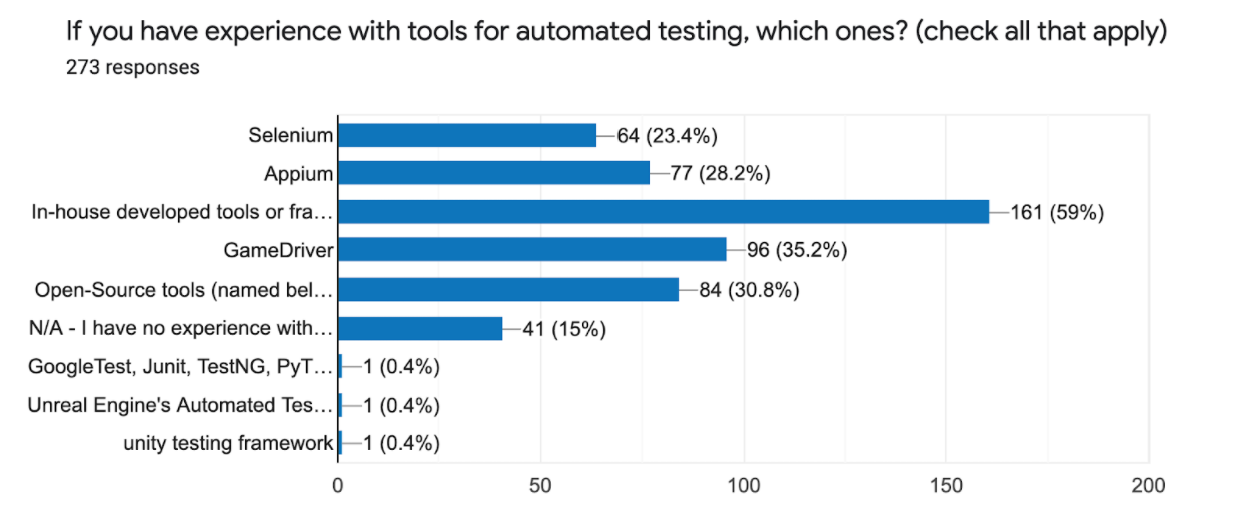

From a tooling perspective, the majority of respondents’ experience is with “in-house developed tools or frameworks”. This is to be expected, as there hasn’t been a standardization effort in this space until recently. The remaining responses are primarily open-source solutions not specifically designed for or supporting game engines. Considering GameDriver is the source of this survey, it’s no surprise that our solution is ranked #2 here. But it’s hard to say how many of those were because we hosted the survey, so best to ignore that ;)

The next question specifically addresses the challenges of developing or maintaining an automated test suite in the context of Immersive Experience development. This is an area we are particularly interested in to help drive the features we invest in for GameDriver, in hopes of easing adoption and enabling teams to take advantage of automation in their projects more easily.

| If you have experience with automated testing in Immersive Experiences, what would you say were persistent challenges in the development or maintenance of the automation suite? | # | % |
| --- | --- | --- |
| App Instrumentation: Configuring the application under test for the automation suite | 72 | 26 |
| Object Identification: Identifying or locating objects during replay | 102 | 37.4 |
| Scenario Definition: Determining "what to test" | 112 | 40.7 |
| Ramping up: Learning the necessary skills to effectively work with a given tool | 130 | 47.3 |
| Script Authoring: Coding or otherwise writing the test for the automation suite to execute | 127 | 46.2 |
| Test Debugging: Figuring out why a test script isn't working as expected | 83 | 30.4 |
| Test Flakiness: "It was working on my machine!" | 72 | 26 |
| Failure Reporting: Figuring out why the test failed | 45 | 16.5 |
| N/A: I do not have relevant experience with automated testing | 34 | 12.5 |

Respondents most often indicated that learning the tool and creating automated test scripts were significant barriers to success in their automated testing efforts, with determining “what to test” coming in a close third. These top two areas are something GameDriver is acutely aware of, and the reason we built our solution to be familiar to those who have worked with standard tools in other areas such as Web and Mobile. Our API is designed to be immediately useful to anyone with experience using Selenium or Appium, and easy enough for even a novice tester to understand. Still, this is an area we are always looking to improve upon, and you can expect to see massive improvements in these areas in our latest release. But back to the survey…

The next question was a free-form option where respondents could elaborate on “...the *most difficult* challenge you have had with automated testing?” The responses ranged significantly but were mainly themed around a lack of tooling or process.

Lastly, we asked “What feature(s) or integration(s) would you like to see that would make your job/life easier to deliver a higher-quality finished product?”, which was intended to help us understand which tools we should be working with. The responses were unfortunately not very specific, and we will look to clarify this in future iterations of the survey - possibly by making it multiple choice. However, there were comments here worth sharing, such as:

“An emphasized connection and understanding between QA and their higher up counterparts. It's important for higher-ups' decisions to be informed based on current standings and projections, and important for QA to understand why certain decisions are being made.”

This is essentially referring to the challenge of reporting within an organization, including the reporting of status, success, and failure during development and testing. This is a common problem with manual testing, since it can be very difficult to quantify the progress and outcomes of your efforts. Contrast this with automated testing, where important information such as test coverage, completion %, and success or defect escape rates can be easily reported throughout the organization. Without key performance indicators (KPIs) to represent the status of your efforts, decisions can often be made blindly.
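As a rough illustration of the kind of KPIs mentioned here, the sketch below computes completion %, pass rate, and defect escape rate from a test-cycle summary. The `RunSummary` type, its field names, and the sample numbers are all hypothetical, invented for this example; they are not survey data and not part of any GameDriver API. Defect escape rate is taken here as defects found after release divided by all defects found, which is one common definition among several.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical summary of one test cycle (illustrative fields only)."""
    planned_tests: int
    executed_tests: int
    passed_tests: int
    defects_found_in_testing: int
    defects_found_after_release: int


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 when whole is zero."""
    return 100.0 * part / whole if whole else 0.0


def kpis(run: RunSummary) -> dict:
    """Compute a few simple quality KPIs from a run summary."""
    total_defects = run.defects_found_in_testing + run.defects_found_after_release
    return {
        "completion_pct": percent(run.executed_tests, run.planned_tests),
        "pass_rate_pct": percent(run.passed_tests, run.executed_tests),
        "defect_escape_rate_pct": percent(run.defects_found_after_release, total_defects),
    }


# Made-up numbers purely for demonstration.
sample = RunSummary(
    planned_tests=200,
    executed_tests=180,
    passed_tests=171,
    defects_found_in_testing=45,
    defects_found_after_release=5,
)

for name, value in kpis(sample).items():
    print(f"{name}: {value:.1f}")
```

Numbers like these are straightforward to produce when test runs are automated and logged consistently; with purely manual testing, the inputs themselves are often hard to collect.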

Additional comments to this question included:

“E2E test automation standardized”

“I wanted functionality that was compatible with all platforms”

“Automated testing”

“Portable instrumentation layer available for C++”

“Easier to run tests on a completed build, easier to run visual tests on a cloud build server.”

“Multi-platform integrated development software”

We love these responses, of course. These represent what we do at GameDriver.

Conclusion

We’re biased when it comes to the benefits of doing automated testing as part of the application development process. Otherwise, we wouldn’t be in this business. Even so, it seems from the survey results that many of those who responded would agree. In summary, the data would strongly suggest that there are too many features and never enough time, resources, or tools to test everything that is needed.

This is why we believe that automated testing is critical to the success of Immersive Experience delivery today. Without it, teams play a never-ending game of catch-up with features and releases.